
It's Alive? Elon Musk's OpenAI Tech Tells Me A Very Spooky Story

I've never been one to get too freaked out about the looming challenges and transformations that the world faces as we enter an era of sophisticated and widespread use of artificial intelligence. This is not to say that I don't believe that the rise of such technology will have a dramatic impact on all of our lives, because I certainly do think so. Always a student of both pop culture and technology, I chose where to go to college at least in part because the school's computing research reputation prompted an infamous fictional rogue AI to be born there in 2001: A Space Odyssey. I was far from the only one whose choice was swayed by this notion. We wanted to see if there was a ghost in the machine.

Turns out there are several. Yes, robots will come for our jobs. Deepfakes will transform the way we consume news yet again. AI-driven marketing campaigns based on sophisticated psychometric data will get vastly better at trying to sell us things and influence our politics.

We'll meet such challenges and figure them out because we must. It really is that simple. We've faced such unfathomable technological transformations before, and I was reminded of that earlier this week when I interviewed Eric Hayden of FuseFX about his work on the just-released Deadwood movie. The decade-plus from the late 1870s to the late 1880s that the series and movie cover represents a startling technological change in the lives of ordinary people. In rapid succession came the telephone, the electric grid, the transcontinental railroad, and countless innovations built on top of those things and others. These changes were both exciting and scary, but above all: unstoppable. This was perfectly captured earlier on in the series with:

You cannot fuck the future, sir. The future fucks you.

The technological whiplash of that era even spawned its own AI concerns, when an inventor named Zadoc Dederick built a walking, steam-powered robot, sparking the American science fiction publishing ecosystem as a side effect.

Dangerous Transformers?

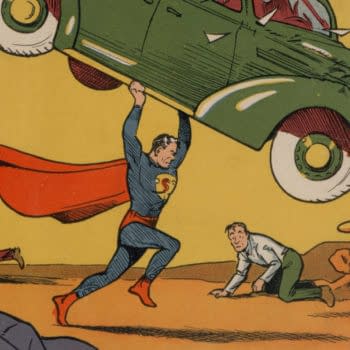

While I was catching up on some recent AI news, the notion of "The AI That's Too Dangerous to Release" from Elon Musk-backed OpenAI grabbed my attention. You probably recall that Musk is the man who manufactured and sold flamethrowers for consumer purchase, so "too dangerous to release" in this context ought to be pretty good.

The details do not disappoint. OpenAI spent several months with a lot of computing power and an enormous dataset to develop a text generator they call GPT-2 ("Generative Pretrained Transformer 2"):

Our model, called GPT-2 (a successor to GPT), was trained simply to predict the next word in 40GB of Internet text. Due to our concerns about malicious applications of the technology, we are not releasing the trained model. As an experiment in responsible disclosure, we are instead releasing a much smaller model for researchers to experiment with, as well as a technical paper.
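For a feel of what "trained simply to predict the next word" means, here is a toy sketch in Python. To be clear, this is not how GPT-2 works under the hood: it is a simple word-pair counter rather than a Transformer, the tiny corpus is made up for illustration, and the function names `train_bigrams` and `generate` are my own hypothetical ones. The basic loop is the same idea in miniature, though: look at the text so far, pick a likely next word, repeat.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, prompt, max_words=8):
    """Extend the prompt by repeatedly appending the most frequent next word."""
    output = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # the last word was never followed by anything, so stop
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


# A made-up stand-in for the 40GB of Internet text (an assumption for the demo).
corpus = (
    "it was a dark and stormy night and the night was a dark night "
    "and the storm was loud"
)
model = train_bigrams(corpus)
print(generate(model, "it was", max_words=6))
```

Always taking the single most frequent next word makes a model like this loop quickly, which is one reason real text generators sample from the likely candidates instead of always choosing the top one.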

Dangerous? I'll reserve judgment on that for the moment, though reading through that technical paper they mention, my eyes did widen a bit when I spotted the notorious Robert Mercer — who is of course well known for being connected to one of the matters I mentioned above — buried in the cites for some research work he was part of in 1980. Mercer did attend the same school that I did a couple of generations ahead of me, though I doubt it was because of 2001: A Space Odyssey. When it comes to rogue AI, rumor has it he's more of a Skynet fan.

In the README for the code itself, OpenAI does note that: "The dataset our GPT-2 models were trained on contains many texts with biases and factual inaccuracies, and thus GPT-2 models are likely to be biased and inaccurate as well," and while that's something short of Judgment Day, it's still an excellent point.

GPT-2 has been generating buzz for some of the clever uses experimenters have put it to so far, including writing up alternative endings for Game of Thrones, and the strange story of Ben Gilburt, who used GPT-2 to generate a novel.

The Dark Knight Transformed

Deciding to put GPT-2 to a small test myself, I jumped over to Talk to Transformer, and entered a starting prompt for the machine to work with. What sort of beginning sentence to use? The answer was obvious. What better place to start than with the sentence considered the very definition of terrible starting points for stories: the beginning of Edward Bulwer-Lytton's 1830 novel Paul Clifford: "It was a dark and stormy night."

The results of this starting point are wonderfully weird.

It was a dark and stormy night.

But we're alive!

They're alive! They're always alive.

Our bodies are still there.

It was a dark and stormy night.

But we're alive!

They're alive! They're always alive.

My legs are still warm.

My feet are warm.

They're still there.

They're still there.

My legs are still warm.

My feet are warm.

They're still there-

A dark and stormy night.

But we're alive:

Now we're alive,

Now we're alive!

We're alive,

So we want you all to

You want us all

To join our club

With our own kind.

I want to join their club

With our own kind.

Ghost in the OpenAI Machine

I'm not sure what sort of club OpenAI's GPT-2 is referring to there, but I think I'm in. Sign me up. I'll admit it's a strangely appropriate passage following that opening line, whose author also penned a somewhat infamous 1842 occult novel called Zanoni which popularized the literary concept of the Guardian of the Threshold, an entity which blocks the unworthy from ascending the path to greater knowledge.

GPT-2 might not be so far from passing that test.