
Interview: Chatting With Hyperreal Founder Remington Scott

Something that's been making headway recently with new technologies is the question of how personal likenesses will be used down the road. With deepfake videos becoming a bigger deal, how one's likeness is used across various types of media is a growing concern. However, some companies are moving forward with tech that not only ensures your likeness is protected but also that it is properly captured and managed, so that you get the best version of it to use across multiple platforms. One of those companies is Hyperreal, which has been working with a number of celebrities and artists to get the most out of the new tech, such as last year's music video from Paul McCartney and Beck, in which McCartney's likeness from the '60s was used throughout. We had a chance to chat with the company's founder, Remington Scott, about the work they've been doing as well as his own career in gaming and tech.

BC: Hey Remington, how have things been for you lately?

Remington: Outstanding! I say this on behalf of the team at Hyperreal and our partners, as we're all unlocking achievements we've been pursuing for years, even decades. My job involves transforming imagination into Hyperrealities. It's incredibly fulfilling to live at a time when we can take giant strides forward in Hyperreal simulations of reality. Day to day, I look forward to the camaraderie the Hyperreal team enjoys as a growing community of industry-leading innovators and creators working to realize their potential. Every day at Hyperreal is a new opportunity to fulfill your dreams, and I'm thankful for this.

For those unfamiliar with your work in gaming, how did you get involved and how has your career evolved over the years?

I spent my early teen years playing first-generation 8-bit console games and dropping quarters at arcades. Video games were my first love, and I came of age during a renaissance in home console games. In high school, I was fortunate to co-create my first video game, using innovative tech to digitize images from video. The result was an experience that would be acclaimed as the first digitized home video game. Using digitized animations for games led to a new business model: we were producing video games monthly that featured realistic live-action imagery, while much larger, better-funded game developers were struggling with far longer development times, cost overruns, and crude, underwhelming hand-drawn animations.

Fast-forward several years: digitized images in games had become commonplace. The term "Multimedia" was coined to describe interactive digitized video. The largest publisher of digitized video games, Acclaim Entertainment (e.g., Mortal Kombat), had built its corporate HQ in the next town over on Long Island, NY, and I was directing digitized action in its green screen studio. The digitized animations were then sent to Acclaim's worldwide network of game developers. Before the age of 25, I was an Interactive Director in an extraordinary video game publishing empire that produced dozens of titles per year based on high-profile IPs.

How did you first get into motion capture and what's it been like seeing that technology grow?

Acclaim's $10M+ investment in building the world's first motion capture studio for entertainment gave it a leadership position and drove significant growth in the motion capture industry. People generally don't think about how innovations like motion capture became a new kind of animation. The fact is, motion capture was not created for entertainment. It had been developed in medical labs, where a subject would walk on a treadmill surrounded by an array of cameras that recorded the biomechanics of the ball of the femur rotating in the hip socket, for hip replacement surgery. This was called gait analysis. In these settings, there was no need for full-body biomechanics or animation tools. Therefore, when Acclaim adapted the tech to build an infrastructure to drive the motion of the digital 3D characters in its video games, it took years of trial and error to develop a platform capable of driving animation for entertainment.

More than just a system to capture motion, Acclaim developed an industry-defining animation platform featuring the first multi-person, large-scale capture zone. Real-time capture and retargeting became possible, and motion editing tools were invented, among many innovations that have since become canon. This is where the first realistic, moving, interactive digital humans came to life. My role at Acclaim was to bridge the gap between Product Development and the Advanced Technology Group, directing how digital humans came to life and building production methodologies within a new technology framework that would become guiding industry principles. The results could be seen in Turok: Dinosaur Hunter on the N64, one of my most successful games at Acclaim using motion capture.

I imagined a future in which digital humans in games were just the start, and I believed Hollywood films would soon showcase hyperrealistic humans coming to life via motion capture. However, the reality was the opposite. Hollywood did not want to take a chance on a new and different form of animation, and Hollywood animators refused to use the tech. A century of traditional keyframe methodology was a deeply entrenched obstacle. Animated films even put disclaimers at the end of their movies stating "100% Genuine Animation, No motion capture or any performance shortcuts were used." I didn't give up. I believed it was only a matter of time before this new kind of animation would disrupt traditional Hollywood animation. However, rather than coming from an existing film studio, the shot across the bow would come from the video game goliath Square. After its phenomenal success in creating the immersive worlds of the Final Fantasy video game franchise, Square built a movie studio composed of top international talent to produce the first hyperrealistic animated film. The key differentiator on this film was using motion capture to create a more realistic human performance.

At Square, I was the motion capture director for the Final Fantasy film. The result made history as the first theatrically released film to use motion capture for its principal performers to bring hyperreal digital humans to life. Hollywood took notice, but it wasn't enough to effect change. I was looking for an opportunity to showcase the tech in something so outrageously popular that motion capture would undeniably be viewed as a prime innovation in 21st-century filmmaking. The key was to empower the right performer with cutting-edge motion capture tools and methodologies to bring to life one of the most mesmerizing digital human performances in cinema. Enter Andy Serkis and The Lord of the Rings. I supervised motion capture at Weta Digital and architected its performance capture pipeline, which resulted in Weta winning an Academy Award for "using a computer motion capture system to create the split personality character of Gollum and Smeagol" for The Lord of the Rings: The Two Towers.

What made you decide to start your own studio with the launch of Hyperreal?

In the years that followed The Lord of the Rings, I was fortunate to be a supervisor on the digital human team at Sony Imageworks on blockbuster feature films, including the Academy Award-winning VFX for Spider-Man 2. I also authored several patents, including a system that reads brain waves to drive facial animation via bioelectrical signals, and the now-ubiquitous head-mounted facial camera system. I was on the team that identified ICT's Light Stage technology as an important tool for recording high-fidelity visual human data, used it on a feature film, and then showcased a Light Stage digital double of Doc Ock in real time, powered by the PlayStation 3, at the PlayStation 3 launch event. In film, the giant strides we made in the early days gave way to an intense focus on small details. Having attained a level of 'maximum resolution' in the details of a digital human, I then focused on my next challenge: returning to the video game industry with a new product, feature-film-quality digital humans in real time.

Call of Duty, Just Cause, Assassin's Creed, Resistance, Spider-Man, and Killzone were some of the franchise games I directed action for. Each game I worked on was another opportunity to learn from top industry artists about their points of friction and to understand how realistic real-time digital humans could be integrated into developers' pipelines. Virtual Reality and Augmented Reality opened new commercial opportunities, and I developed content for both platforms, showcasing hyperrealistic digital humans and performances in these new mediums. That work included an interactive global activation for Netflix that the Advanced Imaging Society recognized as the Best Augmented Reality Experience.

These experiences led to solutions for several key technical obstacles I felt we needed to address before starting Hyperreal. First, I was now confident that a high-fidelity Hyperreal digital human product could be interoperable across key entertainment platforms, from film to video games. We call this the HyperModel, and the internal system that brings it to life is HyperDrive. Next, industry tech giants such as Nvidia and Unreal were delivering processing that could visualize hyperrealistic rendering features, such as ray tracing, in real time. Finally, machine learning and artificial intelligence were unlocking technological innovations that would increase emotional realism and drive scale in a meaningful way.

Removing these technology barriers allowed me to focus on the first principles for starting Hyperreal: I want to empower exceptional talent to create outstanding metaverse entertainment. At the heart of Hyperreal's entertainment experiences are ageless 3D digital double HyperModels with unlimited performance potential, which break through the limitations of being human in an age of virtual convergence. A timeless 3D HyperModel is the future of talent's intellectual property and entertainment empires, and it will create endless new experiences for generations of fans.

Why 'hyperreal,' and not 'characterizations' or 'cartoons'? I believe hyperreal simulations of reality bring us closer to emotional, psychological, and physical human connections, which provoke deeper human responses than cartoon characterizations. Looking toward the future, I couldn't help but ask: if technology were not a limiting factor and anything were possible, would you prefer a hyperrealistic experience or a cartoon? I felt I had seen enough cartoons and wanted to be part of something different, a worthy challenge that can continually unite the most exciting innovations in computer imagery and build upon them. This is an endeavor that will happen, and Hyperreal is building the team to contribute to this future. Quality is my obsession. Everything I do is focused on quality first, and everyone on the Hyperreal team echoes and amplifies this belief. I am most interested in pushing the boundaries of what's possible. We are now in a new frontier of realizing imagination beyond the human experience.

What recently pushed you toward working with celebrities to capture their likeness?

Some of the actors I've digitized include Anthony Hopkins, Angelina Jolie, Jackie Chan, Tobey Maguire, James Franco, John Malkovich, Robin Wright, Will Smith, Ray Winstone, Billy Crudup, Thomas Haden Church, Andy Serkis, Fan Bingbing, and Kevin Spacey. Over the years, digitizing celebrities has become a process that repeats at the beginning of each production, whether it's for a feature film, virtual experience, or video game. Many times while being scanned, talent would ask, "How do I own this?"

Traditionally, each time talent is scanned and an avatar is created, it consumes a very large portion of the project's budget and time. The process is inefficient, costly, and time-consuming for one-time use. Under strict image rights, the resulting digital asset cannot be reused, and it only works within the infrastructure of the VFX house where it was created. What I just described is the existing business model that Hyperreal is disrupting. Hyperreal is building the HyperModel for talent so they can own an interoperable intellectual property asset and reuse it across unlimited future projects. The fact that talent does not own the most valuable digital asset of their career needs to change. With their Hyperreal HyperModel, talent can greatly expand their intellectual property, influence, and performances, and engage with fans across all languages.

How important is it in this current age of media for people to own the rights to their likeness as a digital asset?

Hyperreal's primary business is to empower exceptional A-list talent and A-list brands. We are in an age where unlimited new possibilities in expanding digital Metaverse worlds will enable talent to build a strong leadership presence in a new market space that represents one of the largest economic opportunities of this century. The greatest problem facing talent who want to explore new Metaverse opportunities is that they currently do not have a HyperModel. With a HyperModel, talent has a digital counterpart that can explore and develop unlimited new digital opportunities. It's the newest tool in talent's toolbox, one that allows them to 'scale' as fast and as big as they want across Metaverse opportunities and beyond.

Owning a 3D HyperModel with corresponding copyrights is a step toward protecting and securing digital rights, ownership, and control in the metaverse and beyond. It is very important for celebrities to be aware of the value of their image rights and how to protect them. Some celebrities have obtained trademark registrations for their image and other representations. We believe that owning a HyperModel ensures that no one can profit from a celebrity's likeness on new visual and interactive platforms without a license. The HyperModel is an authorized extension of talent and can be treated as a one-of-a-kind, copyrightable digital asset. As the HyperModel earns revenue, the digital asset's worth increases along a trajectory that may exceed the valuations of music rights and catalog sales, which are currently fetching exorbitant prices.

What's the process you go through in capturing their likeness so that they can fully own the rights to it?

There are three components to capturing and preserving the human performance 'source code,' commonly referred to as digital DNA: 3D image, motion, and voice. Hyperreal's process is proprietary and evolves with technological innovation. However, the basic idea is that talent is recorded in a custom HyperScan system featuring an array of synchronized high-fidelity cameras and lights. Talent performs a customized template of facial muscle actions that allows each muscle of the face to be uniquely identified for control by the HyperDrive system. We are testing a new process in which HyperScan records the shape of the face in motion at up to several hundred samples per second, resulting in extremely fast facial capture sessions and a massive amount of data, up to a hundred terabytes per session. The result is a digital human unlike any you've seen before and the beginning of a new level of human emotion and fidelity.

A HyperScan session usually takes anywhere from 30 minutes to a few hours at most, depending on makeup and wardrobe changes. We also have talent perform a series of vocalizations so that their voice is digitized for any future use in AI or de-aged performances. Finally, we record their signature motions using motion capture. How they move in relation to how they speak and perform is another part of the larger picture that we believe will be driven by AI in the near future. The digital DNA data is owned by talent. It is the core of all the innovations we're building on and is the exact digital image, voice, and movement of talent. We encrypt the digital DNA data using blockchain technology, securely vault it for talent, and copyright it for protection.

It's important to note that Hyperreal's infrastructure is based on a 'fluid technology' concept that flows through all our HyperModels. This means the HyperModel technology will continually improve and update over time, ensuring that the latest innovations are applied to future uses.

Once they have that likeness, what are they able to do with it and how do they go about making sure others can't just use their own version?

Hyperreal then converts all the digital DNA data into a 3D digital human called a HyperModel. The HyperModel asset can perform in real time in interactive experiences, such as virtual productions and XR platforms, or can be rendered traditionally in a manner appropriate for feature films. So there is a lot of latitude for possibilities. We're just scratching the surface, but it seems that almost everyone we work with has a different use for their HyperModel. The most traditional examples have been digital doubles in feature films. Recently, HyperModels have been featured in interactive and social engagement, fashion and music videos, branding, artificial intelligence communication, and experimental entertainment methodologies, with the goal of unlocking new business models and scale.

We're in discussions with a skincare company that wants avatar simulations of its products' results on various photorealistic synthetic brand faces. We're talking about HyperModels as brand ambassadors and influencers for corporations, synthetic virtual music artists, metaverse worlds, and interactive games. Even popular cable networks want to stream A.I. hosts and virtual assistants. Across all these verticals, we haven't seen any limitations yet. We'd be happy to hear about some of the challenges your readers have come across and see if we can help find solutions. Hyperreal uses security protocols and blockchain encryption to protect the assets. We've worked with government military contractors in the past and have several levels of protection.

What people have you worked with so far and where has this been used that people can see?

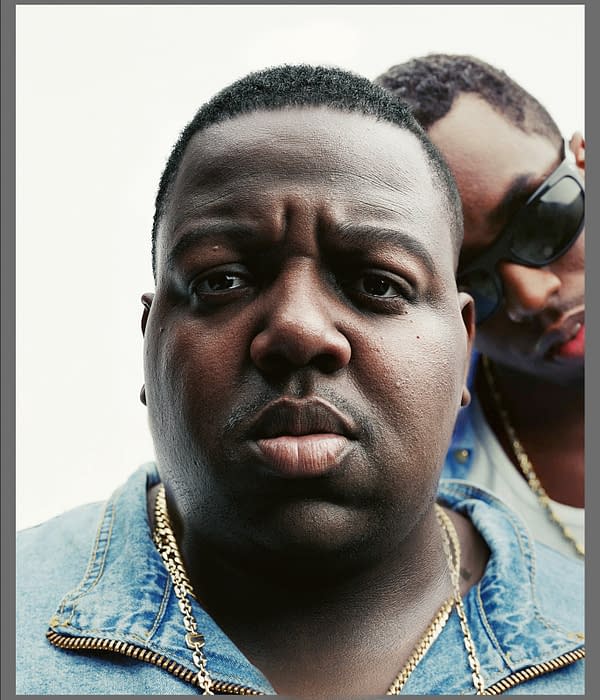

The brands and talent we've worked with include influencers, actors, and musicians representing fashion brands such as Steve Madden in a series of animated Instagram posts. On screens outside stadiums, soccer legend Leo Messi's HyperModel, using a custom artificial intelligence brain, interacted with fans, asking them questions about his life to find his greatest fan. The creator of American Idol, Simon Fuller, worked with Hyperreal to bring to life an intergalactic virtual music star, Alta B, who appeared in a new music video that garnered over 50 million views in days and then appeared on a video communication platform to interact with her fans. We've worked with one of the world's most influential pop artists, Paul McCartney: Hyperreal co-produced his music video "Find My Way," featuring Beck, in which McCartney was de-aged. The Notorious B.I.G. has been digitally reborn to perform for his fans again, both in live concerts and in a metaverse world representing 1990s Brooklyn.

Virtual concerts built on video game engines are a new venue that is transforming how music artists can connect with over 2 billion gamers. For Sony's new Immersive Music division, Hyperreal created the HyperModel of Epic recording artist Madison Beer. Her digital HyperModel performed in a virtualized Sony Music Hall in an experience rendered entirely in Unreal Engine, featuring Madison's HyperModel performing in fire, in rain, and through a galaxy of stars and fireworks. The event was first ray-traced in the cloud and pixel-streamed as a real-time interactive concert on mobile devices through Verizon's 5G initiative. Then the event was shown in Virtual Reality, and finally it premiered as a one-time live stream to the public as a virtual concert event on TikTok. It currently lives on YouTube. Madison performed in motion capture once; however, her virtual concert can continue to live across multiple platforms, engaging fans in vastly different ways.

We have a number of very significant music artists, from all generations, that Hyperreal is working with on creative treatments to empower them to engage with their current and future generations of fans in ways they could never have achieved before.

Do you foresee this being a thing everyone ends up doing down the line, or is it going to be a case-by-case basis?

I believe everyone will have some form of avatar that may live in some version of the metaverse. A great example is Steven Spielberg's feature adaptation of Ernest Cline's Ready Player One. The OASIS is populated by all kinds of avatars; however, it is only at the very end of the film (SPOILER ALERT) that Parzival meets Gregarious Games' creator, James Halliday. Parzival is confused: he knows Halliday is deceased, but he's not sure, because he's talking to a perfect simulation of Halliday, unlike all the other avatars in the OASIS. Halliday stands out from the other avatars as more human. Alive. Real. Similarly, in Neal Stephenson's seminal "Snow Crash," avatars take many different shapes and sizes. However, only those with significant computing power can look hyperrealistic.

These visionaries describe variance across the spectrum of avatars because, as with all things, there is a bell curve in quality and performance: the majority sits in the middle of the curve, and the most extreme sits at the very end. We strive to represent the extreme on one side of this spectrum, a level of reality beyond what we perceive as real in the physical world. This is Hyperreal. We believe the products and innovations we create have higher value because they are unique. We strive to be the ultimate driving machine for a human to slip into the skin of their HyperModel and perform inside the virtual worlds of the Metaverse. Hyperreal is engineering digital humans at a level that we believe is the high-water mark for quality metaverse entertainment and beyond.

Hyperreal has developed three distinct technology silos for our HyperModels. GEMINI is the term we use for creating an exact digital double of the talent we're partnering with. It literally stops time at the talent's age at acquisition. This is the most straightforward process, and we highly recommend that every performer start here. FOUNTAIN is the term we use for the technology that de-ages talent, resulting in a timeless, hyperrealistic digital version of themselves. We engineered this technology as an add-on to the GEMINI acquisition. PHOENIX is a stand-alone technology that we use when we cannot scan talent because they are deceased. This is the most time-consuming process.

Hyperreal digital humans come in three classes of HyperModel. HYPERION is our flagship digital human class, which represents our commitment to excellence in our partnerships with the greatest talent and performers in the world. Remarkable. Distinct. Supreme. This is the ultimate digital human asset class and is meant for high-fidelity emotional connections. CENTURION is Hyperreal's class of digital humans for when action speaks louder than words. CENTURIONS are less time-consuming to build, are budgeted at lower rates, and can be upgraded to HYPERION. Both of these classes are bespoke for A-list talent and performers. Recently, due to demand for a broader range of high-fidelity humans available to the general public at scale, we are developing a road map for the CIVILIAN class.

What projects are you currently working on where we can see more of this tech be applied?

The nature of our work with celebrity talent is that we serve as a part of their outreach, performances, branding, philanthropy, and marketing. Because of this, there are timed rollouts and 'reveals' that we can't discuss until they are publicly disclosed, so it is difficult to talk about the upcoming slate of artists Hyperreal is working with and the projects we're creating. I'm personally excited about the innovative Web3 and NFT strategies for the new engagement platforms we're building, which support and nourish international communities of fans so they can participate more with their favorite HyperModels. We're very excited about how Web3 and NFTs give digital assets value, which is our core mission; however, we're also ultra-conservative, making sure our projects have meaning and purpose so we stay clear of volatile schemes and fads. High-quality projects centered on engagement and value exchange with the fan community are core to the success of our Web3 and NFT strategy.

We're looking forward to working with our partners in Korea, including influential technology, entertainment, and media companies. Korea is a leader in the acceptance of new technology concepts in digital human infrastructure. We're excited about our global expansion and partnerships. Hyperreal will continue to strive toward the highest standards of excellence for our partners, and we're very excited about where HyperModels are going to take us in the near future.